...or “Why something as simple as a space can break things”

Since early November, we’ve been getting hundreds of errors a day when redirecting traffic to our sister website in the UK. The timing coincided with their release of a new version of the site, but they were unable to help us determine the cause of the error.

The error was: `System.Net.WebException: The server committed a protocol violation. Section=ResponseHeader Detail=CR must be followed by LF`.

Every article on the subject indicated that the root cause was the server not adhering to the HTTP/1.1 protocol properly, but that the exception itself was raised because of how a .NET application parses response headers by default.

There appeared to be three possible solutions:

1. Get the other developers to fix their website. Of course, this is unlikely to happen with a third party unless you have a relationship with them.

2. Suppress the error in your application by setting `useUnsafeHeaderParsing="true"` in your web.config. This isn’t a great solution because it affects the entire application and may expose you to other issues.

3. Use reflection to set the same config setting programmatically. Here is a good thread on Stack Overflow that showcases solutions #2 and #3. While this would solve the problem, we decided after internal discussion that we shouldn’t have to add code (nor did we have the time) to deal with a problem on their end.

The majority of the articles tackled the problem at face value. The error says that a header line must be terminated with a CRLF, but the response contained only a CR.

Mehdi El Gueddari has a great article on how he went through a variety of steps to debug this exact problem.

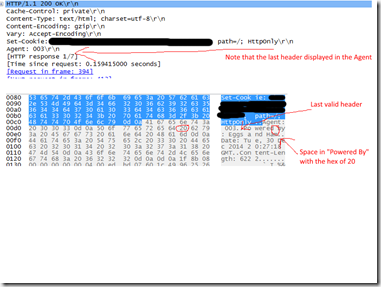

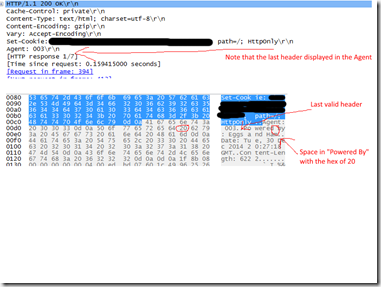

I tried those same steps myself and brought in one of our senior developers. Interestingly, we ran into the same problem he did: Fiddler was “fixing” what was a bad response header. All of the headers were displayed, so nothing initially jumped out as the problem. However, we did see that the UK site was inserting a custom header with the key “Powered By:”, and we focused our effort there. The value was “Eggs and Ham”.

For security reasons, changing the response headers that advertise your server technology can be a good thing, and we believed that the UK team made the change for that reason (and for a little humor).

Mehdi’s article put us on the right track, but it was his Attempt #4: Wireshark that finally helped us identify the true problem. In his case, he was looking for the missing CRLF (0D 0A hex).

When we used Wireshark, the hex view showed that the headers had the required CRLF, but in the preview not all of the headers were being decoded properly. This was the Aha! moment for us, and it brought us full circle back to that custom header we saw in Fiddler.

The problem was that the key was called “Powered By” and not “PoweredBy” or “Powered-By”. A space is not valid in an HTTP header field name.
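To make the rule concrete, here is a minimal Python sketch (not something we ran at the time) that checks a header name against the `token` grammar that HTTP/1.1 (RFC 7230) uses for field names. The function name `is_valid_header_name` is made up for illustration.

```python
import re

# RFC 7230 defines a header field name as a "token": one or more "tchar"
# characters. Letters, digits and a handful of symbols are allowed;
# spaces and other separators are not.
TOKEN_RE = re.compile(r"[!#$%&'*+\-.^_`|~0-9A-Za-z]+")


def is_valid_header_name(name: str) -> bool:
    """Return True if `name` is a syntactically valid HTTP field name."""
    return TOKEN_RE.fullmatch(name) is not None


# Illustrative checks using the names from this post.
for candidate in ("Powered By", "Powered-By", "PoweredBy"):
    print(candidate, is_valid_header_name(candidate))
```

Only the first name is rejected, because the space is not one of the allowed characters.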

Fiddler showed the key, but it automatically fixed the problem and displayed ALL of the headers, so it wasn’t obvious.

Wireshark, on the other hand, was unable to parse the response headers because of that space, and we didn’t see the remaining headers (including valid ones like “content-length” and “date”).
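If you have the raw response bytes (for example, copied out of a packet capture), a strict check along the following lines can point straight at the offending line instead of relying on a tool’s forgiving display. This is only a sketch under assumptions: `find_bad_header_lines` is a hypothetical helper, and the sample response is invented to resemble what we saw, not a real capture.

```python
import re
from typing import List

# Same RFC 7230 "token" rule as for field names, applied to bytes.
TOKEN_RE = re.compile(rb"[!#$%&'*+\-.^_`|~0-9A-Za-z]+")


def find_bad_header_lines(raw: bytes) -> List[bytes]:
    """Return header lines whose field name is missing or not a valid token."""
    head = raw.split(b"\r\n\r\n", 1)[0]   # header block ends at the blank line
    lines = head.split(b"\r\n")[1:]       # skip the status line
    bad = []
    for line in lines:
        name, sep, _value = line.partition(b":")
        if not sep or TOKEN_RE.fullmatch(name) is None:
            bad.append(line)
    return bad


# Made-up response for illustration only.
sample = (
    b"HTTP/1.1 200 OK\r\n"
    b"Powered By: Eggs and Ham\r\n"
    b"Content-Length: 0\r\n"
    b"Date: Mon, 01 Jan 2024 00:00:00 GMT\r\n"
    b"\r\n"
)

print(find_bad_header_lines(sample))
```

For the sample above, the only line flagged is the “Powered By” one; the `Content-Length` and `Date` lines pass.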

Now that we had identified the true problem, we were able to go back to the UK team with our findings. Presented with these details, they were able to quickly make the change on their end to prevent future errors.